Enterprise Database & Data Engineering Solutions

Transform your organization with scalable, high-performance data platforms that deliver actionable insights and drive innovation.

-- Fact table for sales analytics
CREATE TABLE fact_sales (
    sale_id BIGINT IDENTITY(1,1) PRIMARY KEY,
    date_id DATE NOT NULL REFERENCES dim_date(date_id),
    product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
    customer_id INTEGER NOT NULL REFERENCES dim_customer(customer_id),
    quantity INTEGER NOT NULL,
    unit_price DECIMAL(10,2) NOT NULL,
    discount_pct DECIMAL(5,2) NOT NULL,
    sales_amount DECIMAL(12,2) NOT NULL,
    profit_amount DECIMAL(12,2) NOT NULL
)
DISTKEY(customer_id)
SORTKEY(date_id);

-- Create materialized view for reporting
CREATE MATERIALIZED VIEW mv_monthly_sales AS
SELECT
    d.year,
    d.month,
    p.category,
    SUM(f.sales_amount) AS total_sales
FROM
    fact_sales f
    JOIN dim_date d ON f.date_id = d.date_id
    JOIN dim_product p ON f.product_id = p.product_id
GROUP BY
    d.year, d.month, p.category;

Our Data Engineering Solutions

End-to-end data solutions that help you collect, process, store, and analyze your data effectively.

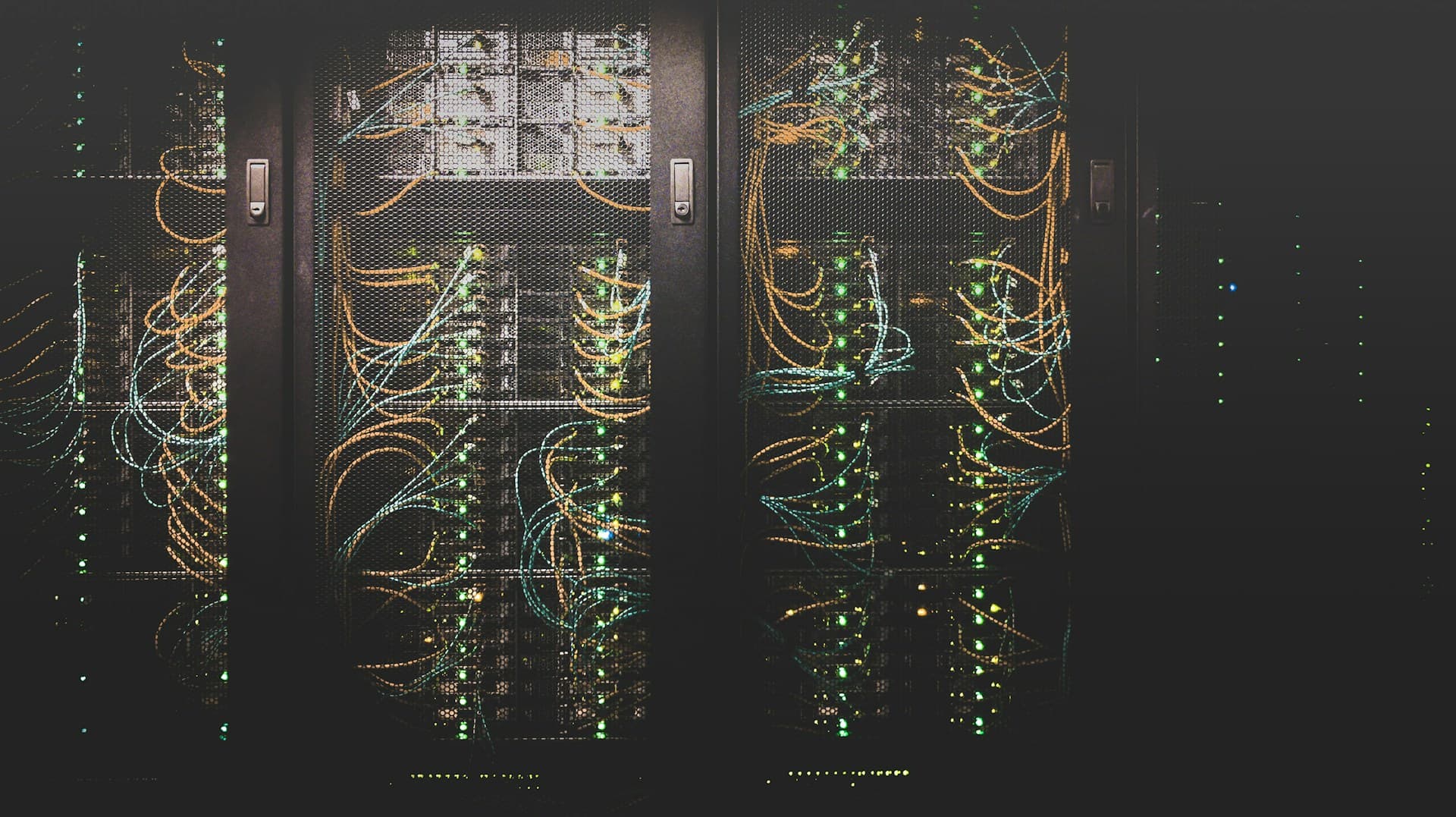

Modern Database Architecture

Design and implementation of scalable database systems that support high-throughput operations while maintaining data integrity and security.

- Polyglot persistence strategy

- Multi-region data replication

- Automated backup and recovery

- Performance optimization

- High availability configuration
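
As one illustration of a polyglot persistence strategy, the sketch below shows a cache-aside read path: check a fast key-value cache first and fall back to the relational store on a miss. It is a minimal sketch under stated assumptions. `DictCache`, `DictStore`, and `get_customer` are hypothetical in-memory stand-ins for a Redis client and a PostgreSQL query, not part of any real library.

```python
import time


class DictCache:
    """In-memory stand-in for a key-value cache such as Redis (hypothetical)."""

    def __init__(self):
        self._data = {}  # key -> (expiry time, value)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            # Entry is stale: drop it and report a miss.
            del self._data[key]
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._data[key] = (time.monotonic() + ttl_seconds, value)


class DictStore:
    """In-memory stand-in for the relational system of record (hypothetical)."""

    def __init__(self, rows):
        self._rows = rows
        self.reads = 0  # counts how often the slow path is taken

    def fetch_customer(self, customer_id):
        self.reads += 1
        return self._rows.get(customer_id)


def get_customer(cache, store, customer_id, ttl_seconds=60.0):
    """Cache-aside read: try the cache, fall back to the store, then populate the cache."""
    key = f"customer:{customer_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    row = store.fetch_customer(customer_id)
    if row is not None:
        cache.set(key, row, ttl_seconds)
    return row


store = DictStore({1: {"customer_id": 1, "segment": "enterprise"}})
cache = DictCache()
first = get_customer(cache, store, 1)   # miss: read from the store
second = get_customer(cache, store, 1)  # hit: served from the cache
```

In this sketch only the first call reaches the store. A production version would also need to invalidate or update the cached entry whenever the underlying row changes.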

apiVersion: databases.spotahome.com/v1
kind: RedisFailover
metadata:
  name: analytics-cache
  namespace: data
spec:
  redis:
    replicas: 3
    resources:
      requests:
        cpu: 100m
        memory: 512Mi
      limits:
        cpu: 500m
        memory: 1Gi
    storage:
      persistentVolumeClaim:
        metadata:
          name: redis-data
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 20Gi
  sentinel:
    replicas: 3
    resources:
      requests:
        cpu: 100m
        memory: 256Mi
      limits:
        cpu: 300m
        memory: 500Mi
---
apiVersion: acid.zalan.do/v1
kind: postgresql
metadata:
  name: analytics-postgres
  namespace: data
spec:
  teamId: "data-engineering"
  volume:
    size: 100Gi
  numberOfInstances: 3
  users:
    app_user: []
    reporting_user:
      - READONLY
  databases:
    analytics_db: app_user
  postgresql:
    version: "14"
    parameters:
      shared_buffers: "1GB"
      max_connections: "200"
      work_mem: "16MB"

Automated Data Pipelines

End-to-end ETL/ELT pipelines that reliably extract, transform, and load data at scale for analytics and machine learning applications.

- Real-time and batch processing

- Data quality validation

- Incremental loading patterns

- Metadata management

- Error handling and alerting
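
Two of the items above, data quality validation and incremental loading, can be sketched in plain Python. This is an illustrative sketch only: the function names `validate_rows` and `select_increment`, the row layout (`product_id`, `customer_id`, `quantity`, `price`, `created_at`), and the validation rules are assumptions, not part of any specific pipeline.

```python
from datetime import datetime


def validate_rows(rows, required=("product_id", "customer_id", "quantity", "price")):
    """Return a list of human-readable problems; an empty list means the batch passed."""
    problems = []
    for i, row in enumerate(rows):
        for column in required:
            if row.get(column) is None:
                problems.append(f"row {i}: {column} is missing")
        quantity = row.get("quantity")
        if quantity is not None and quantity <= 0:
            problems.append(f"row {i}: quantity must be positive")
    return problems


def select_increment(rows, watermark):
    """Keep only rows created after the last loaded timestamp; return them with the new watermark."""
    new_rows = [r for r in rows if r["created_at"] > watermark]
    new_watermark = max((r["created_at"] for r in new_rows), default=watermark)
    return new_rows, new_watermark


batch = [
    {"product_id": 1, "customer_id": 10, "quantity": 2, "price": 9.5,
     "created_at": datetime(2023, 1, 1, 8, 0)},
    {"product_id": 2, "customer_id": None, "quantity": 0, "price": 4.0,
     "created_at": datetime(2023, 1, 2, 9, 30)},
]

problems = validate_rows(batch)
new_rows, watermark = select_increment(batch, datetime(2023, 1, 1, 12, 0))
```

Persisting the returned watermark between runs is what makes the load incremental: the next run passes it back in and skips rows that were already processed.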

from datetime import datetime, timedelta

from airflow import DAG
from airflow.operators.python import PythonOperator
from airflow.providers.postgres.operators.postgres import PostgresOperator
from airflow.providers.amazon.aws.transfers.s3_to_redshift import S3ToRedshiftOperator


def validate_data_quality(schema, table):
    """Placeholder for project-specific checks; replace with real validation logic."""
    raise NotImplementedError(f"add data quality checks for {schema}.{table}")


# Defaults applied to every task in the DAG
default_args = {
    'owner': 'data-engineering',
    'depends_on_past': False,
    'email_on_failure': True,
    'email': ['data-alerts@example.com'],
    'retries': 2,
    'retry_delay': timedelta(minutes=5)
}

with DAG(
    'sales_data_etl',
    default_args=default_args,
    description='ETL pipeline for sales data',
    schedule_interval='0 2 * * *',  # daily at 02:00
    start_date=datetime(2023, 1, 1),
    catchup=False,
    tags=['sales', 'production']
) as dag:

    validate_source_data = PythonOperator(
        task_id='validate_source_data',
        python_callable=validate_data_quality,
        op_kwargs={'schema': 'sales', 'table': 'transactions'}
    )

    # Aggregates one day of transactions. This query only selects the data;
    # writing the result to the S3 key loaded below is assumed to happen
    # in a separate step.
    extract_and_transform = PostgresOperator(
        task_id='extract_and_transform',
        postgres_conn_id='postgres_oltp',
        sql='''
            SELECT
                date_trunc('day', created_at) as sale_date,
                product_id,
                customer_id,
                region_id,
                SUM(quantity) as units_sold,
                SUM(price * quantity) as revenue
            FROM
                sales.transactions
            WHERE
                created_at >= '{{ ds }}' AND created_at < '{{ tomorrow_ds }}'
            GROUP BY
                date_trunc('day', created_at),
                product_id,
                customer_id,
                region_id;
        ''',
        autocommit=True
    )

    load_to_warehouse = S3ToRedshiftOperator(
        task_id='load_to_warehouse',
        schema='analytics',
        table='daily_sales',
        s3_bucket='data-warehouse-landing',
        s3_key='sales/{{ ds }}/daily_extract.csv',
        redshift_conn_id='redshift_warehouse',
        copy_options=['CSV', 'IGNOREHEADER 1']
    )

    validate_source_data >> extract_and_transform >> load_to_warehouse

Modern Data Warehousing

High-performance analytical data stores designed for complex queries and business intelligence, providing a unified view of your organization's data.

- Star and snowflake schema design

- Columnar storage optimization

- Query performance tuning

- Data partitioning strategies

- Materialized view management
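
A star schema usually includes a date dimension such as the `dim_date` table defined in this section. The sketch below generates rows for it in Python. The function name `build_dim_date_rows`, the Monday-based `day_of_week` numbering, the empty default holiday set, and the assumption that the fiscal year matches the calendar year are illustrative choices, not requirements of the schema.

```python
from datetime import date, timedelta


def build_dim_date_rows(start, end, holidays=frozenset()):
    """Yield one dict per calendar day in [start, end], keyed by dim_date column names."""
    current = start
    while current <= end:
        quarter = (current.month - 1) // 3 + 1
        yield {
            "date_id": current,
            "day": current.day,
            "month": current.month,
            "year": current.year,
            "quarter": quarter,
            "day_of_week": current.isoweekday(),  # 1 = Monday ... 7 = Sunday
            "day_name": current.strftime("%A"),   # name depends on the active locale
            "month_name": current.strftime("%B"),
            "is_weekend": current.isoweekday() >= 6,
            "is_holiday": current in holidays,
            # Assumption: the fiscal calendar matches the calendar year.
            "fiscal_year": current.year,
            "fiscal_quarter": quarter,
        }
        current += timedelta(days=1)


rows = list(build_dim_date_rows(date(2023, 1, 1), date(2023, 1, 7),
                                holidays={date(2023, 1, 1)}))
```

Generating the dimension once for a wide date range keeps fact-table loads simple, since every `date_id` a fact row references already exists.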

-- Dimension table for products
CREATE TABLE dim_product (
    product_id INTEGER PRIMARY KEY,
    product_name VARCHAR(255) NOT NULL,
    category VARCHAR(100) NOT NULL,
    subcategory VARCHAR(100) NOT NULL,
    brand VARCHAR(100) NOT NULL,
    unit_cost DECIMAL(10,2) NOT NULL,
    unit_price DECIMAL(10,2) NOT NULL,
    effective_date DATE NOT NULL,
    expiration_date DATE,
    is_current BOOLEAN NOT NULL
)
DISTKEY(product_id)
SORTKEY(is_current, effective_date);

-- Dimension table for customers
CREATE TABLE dim_customer (
    customer_id INTEGER PRIMARY KEY,
    customer_name VARCHAR(255) NOT NULL,
    email VARCHAR(255),
    segment VARCHAR(50) NOT NULL,
    country VARCHAR(50) NOT NULL,
    region VARCHAR(50) NOT NULL,
    city VARCHAR(100) NOT NULL,
    postal_code VARCHAR(20),
    effective_date DATE NOT NULL,
    expiration_date DATE,
    is_current BOOLEAN NOT NULL
)
DISTKEY(customer_id)
SORTKEY(is_current, effective_date);

-- Dimension table for dates
CREATE TABLE dim_date (
    date_id DATE PRIMARY KEY,
    day INTEGER NOT NULL,
    month INTEGER NOT NULL,
    year INTEGER NOT NULL,
    quarter INTEGER NOT NULL,
    day_of_week INTEGER NOT NULL,
    day_name VARCHAR(10) NOT NULL,
    month_name VARCHAR(10) NOT NULL,
    is_weekend BOOLEAN NOT NULL,
    is_holiday BOOLEAN NOT NULL,
    fiscal_year INTEGER NOT NULL,
    fiscal_quarter INTEGER NOT NULL
)
SORTKEY(date_id);

-- Fact table for sales
CREATE TABLE fact_sales (
    sale_id BIGINT IDENTITY(1,1) PRIMARY KEY,
    date_id DATE NOT NULL REFERENCES dim_date(date_id),
    product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
    customer_id INTEGER NOT NULL REFERENCES dim_customer(customer_id),
    quantity INTEGER NOT NULL,
    unit_price DECIMAL(10,2) NOT NULL,
    discount_pct DECIMAL(5,2) NOT NULL,
    sales_amount DECIMAL(12,2) NOT NULL,
    profit_amount DECIMAL(12,2) NOT NULL
)
DISTKEY(customer_id)
SORTKEY(date_id);

-- Create a materialized view for common reporting needs
CREATE MATERIALIZED VIEW mv_monthly_sales_by_category AS
SELECT
    d.year,
    d.month,
    d.month_name,
    p.category,
    COUNT(DISTINCT f.customer_id) AS unique_customers,
    SUM(f.quantity) AS total_units,
    SUM(f.sales_amount) AS total_sales,
    SUM(f.profit_amount) AS total_profit
FROM
    fact_sales f
    JOIN dim_date d ON f.date_id = d.date_id
    JOIN dim_product p ON f.product_id = p.product_id
WHERE
    p.is_current = true
GROUP BY
    d.year, d.month, d.month_name, p.category;

Analytics & Business Intelligence

End-to-end business intelligence solutions that transform raw data into actionable insights through intuitive dashboards and visualizations.

- Interactive dashboard development

- Embedded analytics

- Automated reporting

- Natural language querying

- Predictive analytics integration
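
Automated reporting ultimately reduces to aggregations like the one sketched here: total revenue per month for completed sales, split by one dimension. This is a minimal sketch; the record layout (`date`, `region`, `status`, `revenue`) and the function `total_revenue_by_month` are assumptions chosen for illustration.

```python
from collections import defaultdict
from datetime import date
from decimal import Decimal


def total_revenue_by_month(records, dimension):
    """Sum revenue of completed records per (year, month, dimension value)."""
    totals = defaultdict(Decimal)  # missing keys start at Decimal("0")
    for record in records:
        if record["status"] != "completed":
            continue
        key = (record["date"].year, record["date"].month, record[dimension])
        totals[key] += record["revenue"]
    return dict(totals)


records = [
    {"date": date(2023, 1, 5), "region": "EMEA", "status": "completed",
     "revenue": Decimal("120.00")},
    {"date": date(2023, 1, 20), "region": "EMEA", "status": "completed",
     "revenue": Decimal("80.00")},
    {"date": date(2023, 1, 21), "region": "EMEA", "status": "cancelled",
     "revenue": Decimal("999.00")},
    {"date": date(2023, 2, 2), "region": "APAC", "status": "completed",
     "revenue": Decimal("50.00")},
]

report = total_revenue_by_month(records, "region")
```

Using `Decimal` rather than floats keeps currency totals exact, which matters once the figures feed a financial dashboard.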

version: 2

models:
  - name: sales_metrics
    description: "Core metrics for the sales department"
    columns:
      - name: date
        description: "Date of sales activity"
        tests:
          - not_null
      - name: product_id
        description: "Product identifier"
        tests:
          - not_null
          - relationships:
              to: ref('dim_products')
              field: product_id
      - name: revenue
        description: "Total revenue for the day and product"
        tests:
          - not_null
          # positive_value is not a built-in dbt test; it is assumed to be
          # a custom generic test defined in the project
          - positive_value

metrics:
  - name: total_revenue
    label: Total Revenue
    model: ref('sales_metrics')
    description: "Total revenue across all products"
    type: sum
    sql: revenue
    timestamp: date
    time_grains: [day, week, month, quarter, year]
    dimensions:
      - product_category
      - region
      - customer_segment
    filters:
      - field: status
        operator: '='
        value: "'completed'"
    meta:
      format: "$###,###,###"
  - name: average_order_value
    label: Average Order Value
    model: ref('orders')
    description: "Average value of orders"
    type: average
    sql: order_total
    timestamp: ordered_at
    time_grains: [day, week, month, quarter, year]
    dimensions:
      - customer_segment
      - region
      - acquisition_source
    meta:
      format: "$###,###.00"

Data Engineering Benefits

Our data solutions deliver tangible business value through efficient data management and powerful analytics capabilities.

Enhanced Data Availability

Design high-availability architectures that ensure your critical data is accessible when needed with minimal downtime.

Optimized Performance

Fine-tune your databases and data pipelines for maximum throughput, minimal latency, and efficient resource utilization.

Data Governance & Compliance

Implement robust data governance frameworks ensuring data quality, security, and regulatory compliance across the organization.

Scalable Data Infrastructure

Build data platforms that scale elastically with your business growth, from gigabytes to petabytes without architectural redesign.

Real-time Analytics

Enable real-time insights from streaming data to power dashboards, alerts, and operational intelligence for immediate action.

Unified Data Access

Create a single source of truth across your organization with unified data platforms and seamless integration between systems.

Our Database & Data Engineering Technology Stack

We leverage industry-leading technologies to build robust, scalable database solutions and efficient data pipelines.

Database Technologies

PostgreSQL

Robust relational database with advanced features for enterprise applications

MongoDB

Flexible NoSQL database for modern, document-based data models

Snowflake

Cloud data platform for unified analytics and data warehousing

Apache Kafka

High-throughput distributed streaming platform for real-time data pipelines

Data Engineering Tools

Apache Spark

Unified analytics engine for large-scale data processing

Apache Airflow

Platform to programmatically author, schedule and monitor workflows

Data Warehousing

Solutions for structured data storage and business intelligence

ETL/ELT Pipelines

Custom data integration pipelines for seamless data movement

Frequently Asked Questions

Common questions about our database and data engineering services.

Case Studies

See how our data engineering expertise has solved real-world challenges across industries.

Financial Fraud Detection System

How we engineered a scalable data pipeline and analytics platform to process millions of transactions for fraud detection.

Ready to Transform Your Data Infrastructure?

Let's discuss how our data engineering expertise can help you build scalable, high-performance data solutions.